Conditional Average Treatment Effects (CATE) with DoWhy and EconML

This is an experimental feature that lets us call EconML estimators from within DoWhy. Through EconML, CATE can be estimated with a variety of methods.

All four steps of causal inference in DoWhy remain the same: model, identify, estimate, and refute. The key difference is that we now call EconML methods in the estimation step. A simpler example using linear regression is also included to build intuition for CATE estimators.

All datasets are generated using linear structural equations.
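To make "linear structural equations" concrete, here is a minimal numpy sketch of such a data-generating process. It is an illustrative assumption, not DoWhy's linear_dataset code: the function name simulate_linear_scm, the coefficients and the Gaussian noise are all made up. Common causes W affect both treatment and outcome, instruments Z affect only the treatment, and effect modifiers X change the size of the treatment effect.

```python
import numpy as np

def simulate_linear_scm(n=10_000, beta=10.0, seed=0):
    """Hypothetical linear SCM (not DoWhy's generator): W confounds v0 and y,
    Z affects only v0, and X modifies the effect of v0 on y."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n, 4))          # common causes
    Z = rng.normal(size=(n, 2))          # instruments
    X = rng.normal(size=(n, 2))          # effect modifiers
    c_t, c_z = np.array([1.0, -0.5, 0.8, 0.3]), np.array([0.7, 0.4])
    c_y, gamma = np.array([2.0, 1.0, -1.5, 0.5]), np.array([1.5, -2.0])
    # Treatment equation: depends on common causes and instruments
    v0 = W @ c_t + Z @ c_z + rng.normal(size=n)
    # Per-unit effect of a one-unit change in v0 (the CATE)
    cate = beta + X @ gamma
    # Outcome equation: heterogeneous effect plus confounding through W
    y = cate * v0 + W @ c_y + rng.normal(size=n)
    return W, Z, X, v0, y, cate

W, Z, X, v0, y, cate = simulate_linear_scm()
print("true ATE (sample average of the CATE):", cate.mean())
```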

[1]:

%load_ext autoreload

%autoreload 2

[2]:

import numpy as np

import pandas as pd

import logging

import dowhy

from dowhy import CausalModel

import dowhy.datasets

import econml

import warnings

warnings.filterwarnings('ignore')

BETA = 10

[3]:

data = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

num_treatments=1,

treatment_is_binary=False,

num_discrete_common_causes=2,

num_discrete_effect_modifiers=0,

one_hot_encode=False)

df=data['df']

print(df.head())

print("True causal estimate is", data["ate"])

X0 X1 Z0 Z1 W0 W1 W2 W3 v0 \

0 1.025165 1.245504 1.0 0.158014 -0.646130 1.585682 0 2 20.257835

1 -0.565363 -0.509983 1.0 0.719249 -0.528081 -0.126231 1 1 25.621852

2 -1.066614 0.258180 1.0 0.117570 -1.291546 1.744535 3 2 21.305156

3 -1.997311 0.759307 1.0 0.092892 1.002630 1.813077 2 0 15.028699

4 -0.827012 0.411718 1.0 0.979072 -1.223523 -0.505201 3 2 32.457489

y

0 293.381658

1 206.815987

2 204.213489

3 148.346209

4 329.822796

True causal estimate is 11.21529604289463

[4]:

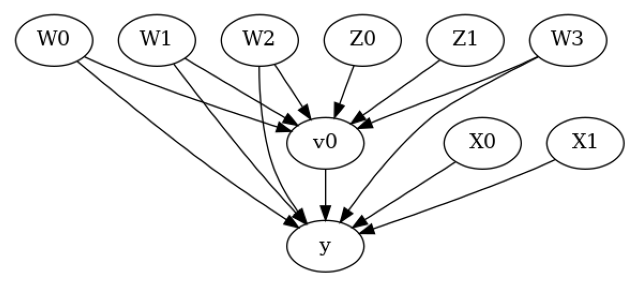

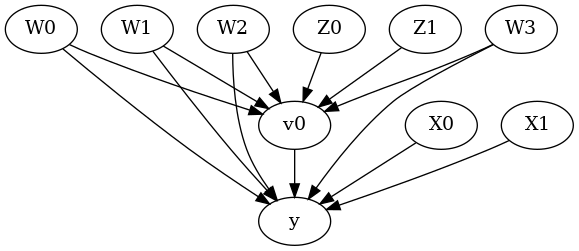

model = CausalModel(data=data["df"],

treatment=data["treatment_name"], outcome=data["outcome_name"],

graph=data["gml_graph"])

[5]:

model.view_model()

from IPython.display import Image, display

display(Image(filename="causal_model.png"))

[6]:

identified_estimand= model.identify_effect(proceed_when_unidentifiable=True)

print(identified_estimand)

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

### Estimand : 2

Estimand name: iv

Estimand expression:

$$E\left[\frac{d}{d[Z_1\ Z_0]}(y) \cdot \left(\frac{d}{d[Z_1\ Z_0]}([v_0])\right)^{-1}\right]$$

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

### Estimand : 3

Estimand name: frontdoor

No such variable(s) found!
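The backdoor estimand above says that conditioning on W0 to W3 is enough to identify the effect of v0 on y. A minimal numpy sketch with made-up coefficients (not the dataset above) shows why: regressing the outcome on the treatment alone absorbs the confounding through W, while adding W as regressors recovers the true effect of 3.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000
w = rng.normal(size=(n, 2))                                    # observed common causes
t = w @ np.array([1.0, 0.5]) + rng.normal(size=n)              # treatment
y = 3.0 * t + w @ np.array([2.0, -1.0]) + rng.normal(size=n)   # true effect is 3

def ols(design, target):
    """Least-squares coefficients, with an intercept column prepended."""
    A = np.column_stack([np.ones(len(target)), design])
    return np.linalg.lstsq(A, target, rcond=None)[0]

naive = ols(t, y)[1]                           # ignores W, so it is confounded
adjusted = ols(np.column_stack([t, w]), y)[1]  # conditions on W (backdoor adjustment)
print(f"naive: {naive:.2f}, adjusted: {adjusted:.2f}")
```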

Linear Model

First, let us build some intuition using a linear model for estimating CATE. The effect modifiers (that lead to a heterogeneous treatment effect) can be modeled as interaction terms with the treatment. Thus, their value modulates the effect of treatment.

Below is the estimated effect of changing the treatment from 0 to 1.

[7]:

linear_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.linear_regression",

control_value=0,

treatment_value=1)

print(linear_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

## Realized estimand

b: y~v0+W3+W0+W2+W1+v0*X0+v0*X1

Target units: ate

## Estimate

Mean value: 11.215317388184836
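The realized estimand y~v0+W3+W0+W2+W1+v0*X0+v0*X1 makes the effect of v0 linear in the effect modifiers: if b_v0 is the treatment coefficient and b_X0, b_X1 are the interaction coefficients, then CATE(x) = b_v0 + b_X0·x0 + b_X1·x1, and averaging it over the target units gives an average effect like the mean value printed above. The sketch below reproduces the idea with plain least squares on simulated data. The coefficients are assumptions, and the design also includes main effects of X, which this sketch adds for generality.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 20_000
W = rng.normal(size=(n, 2))      # common causes
X = rng.normal(size=(n, 2))      # effect modifiers
v0 = W @ np.array([0.8, -0.4]) + rng.normal(size=n)
true_cate = 10.0 + X @ np.array([1.5, -2.0])   # effect of a unit change in v0
y = true_cate * v0 + W @ np.array([2.0, 1.0]) + rng.normal(size=n)

# Columns: intercept, v0, W0, W1, X0, X1, v0*X0, v0*X1
design = np.column_stack([np.ones(n), v0, W, X, v0[:, None] * X])
coef = np.linalg.lstsq(design, y, rcond=None)[0]
b_v0, b_inter = coef[1], coef[6:8]

# Moving v0 from 0 to 1 changes the prediction by b_v0 + b_inter . x
est_cate = b_v0 + X @ b_inter
print("estimated ATE:", est_cate.mean(), " true ATE:", true_cate.mean())
```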

EconML methods

We now move to the more advanced methods from the EconML package for estimating CATE.

First, let us look at the double machine learning estimator. The method_name argument combines the identification strategy with the fully qualified name of the EconML class we want to use: for double ML with backdoor adjustment, it is “backdoor.econml.dml.DML”.

target_units defines the units over which the causal estimate is computed. It can be a lambda function that filters the original dataframe, a new pandas DataFrame, or a string naming one of the three main kinds of target units (“ate”, “att”, and “atc”). Below we show an example of a lambda function.
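Conceptually, a lambda used as target_units maps the dataframe to a boolean mask, and the reported effect is averaged over the rows where the mask is True. The pandas sketch below illustrates that selection step; the per-unit effects come from a hypothetical CATE function, not from a fitted model.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(3)
df = pd.DataFrame({"X0": rng.normal(size=1000), "X1": rng.normal(size=1000)})
# Hypothetical per-unit effects, theta(x) = 10 + 1.5*x0 - 2*x1 (made up)
df["cate"] = 10 + 1.5 * df["X0"] - 2.0 * df["X1"]

target_units = lambda d: d["X0"] > 1   # same form as the filter used below
mask = target_units(df)                # boolean Series, one entry per row
subset_effect = df.loc[mask, "cate"].mean()
print(mask.sum(), "units selected; mean effect:", round(subset_effect, 3))
```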

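method_params is passed directly to EconML: the entries under "init_params" go to the estimator's constructor and those under "fit_params" go to its fit method. For the allowed parameters, refer to the EconML documentation.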
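A simplified picture of how the two dictionaries are used, assuming the estimator is constructed with init_params and fitted with fit_params (consistent with the inference option being placed under fit_params later in this notebook). ToyEstimator is a hypothetical stand-in, not an EconML class, and this is not DoWhy's actual code.

```python
class ToyEstimator:
    """Hypothetical stand-in for an EconML estimator class (not a real API)."""

    def __init__(self, **init_kwargs):
        self.init_kwargs = init_kwargs   # e.g. model_y, model_t, model_final, featurizer

    def fit(self, Y, T, X=None, W=None, **fit_kwargs):
        self.fit_kwargs = fit_kwargs     # e.g. inference=...
        return self

method_params = {"init_params": {"model_y": "GBR", "model_t": "GBR"},
                 "fit_params": {"inference": "bootstrap"}}

# Sketch of the forwarding: the constructor gets init_params, fit() gets fit_params
est = ToyEstimator(**method_params["init_params"])
est.fit(Y=[0.0], T=[1.0], X=None, W=None, **method_params["fit_params"])
print(est.init_kwargs, est.fit_kwargs)
```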

[8]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = lambda df: df["X0"]>1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=False)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

## Realized estimand

b: y~v0+W3+W0+W2+W1 | X0,X1

Target units: Data subset defined by a function

## Estimate

Mean value: 13.400085811212945

Effect estimates: [[14.41337741]

[13.45208362]

[ 9.67842125]

...

[17.54918389]

[16.36823758]

[14.46504907]]

[9]:

print("True causal estimate is", data["ate"])

True causal estimate is 11.21529604289463
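The DML estimate above rests on a partialling-out idea: residualize the outcome and the treatment on the controls, then regress outcome residuals on treatment residuals interacted with features of X. The numpy sketch below is a hand-rolled illustration of that idea, not EconML's implementation. It assumes linear nuisance models (with X·W products), two-fold cross-fitting, and a linear final stage θ(x) = θ0 + θ1·x0 + θ2·x1, whose feature vector [1, x0, x1] plays the role of PolynomialFeatures(degree=1, include_bias=True); the coefficients are made up.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 20_000
W = rng.normal(size=(n, 3))   # controls (confounders)
X = rng.normal(size=(n, 2))   # effect modifiers
theta = np.array([10.0, 1.5, -2.0])   # CATE(x) = 10 + 1.5*x0 - 2*x1
T = W @ np.array([1.0, -0.5, 0.3]) + rng.normal(size=n)
Y = (theta[0] + X @ theta[1:]) * T + W @ np.array([2.0, 1.0, -1.0]) + rng.normal(size=n)

def features(X, W):
    """Nuisance features: intercept, X, W and all X_i * W_j products."""
    inter = (X[:, :, None] * W[:, None, :]).reshape(len(X), -1)
    return np.column_stack([np.ones(len(X)), X, W, inter])

def lstsq_predict(F_train, y_train, F_test):
    coef = np.linalg.lstsq(F_train, y_train, rcond=None)[0]
    return F_test @ coef

# Stage 1: cross-fitted residuals of Y and T on (X, W)
F = features(X, W)
folds = rng.permutation(n) % 2
Y_res, T_res = np.empty(n), np.empty(n)
for k in (0, 1):
    train, test = folds != k, folds == k
    Y_res[test] = Y[test] - lstsq_predict(F[train], Y[train], F[test])
    T_res[test] = T[test] - lstsq_predict(F[train], T[train], F[test])

# Stage 2: regress Y_res on T_res * [1, X] to get the linear CATE coefficients
phi = np.column_stack([np.ones(n), X])
theta_hat = np.linalg.lstsq(T_res[:, None] * phi, Y_res, rcond=None)[0]
print("estimated theta:", theta_hat.round(2))
```

Fitting each nuisance model on one fold and predicting on the other keeps overfitting in the first stage from leaking into the residuals.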

[10]:

dml_estimate = model.estimate_effect(identified_estimand, method_name="backdoor.econml.dml.DML",

control_value = 0,

treatment_value = 1,

target_units = 1, # condition used for CATE

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

## Realized estimand

b: y~v0+W3+W0+W2+W1 | X0,X1

Target units:

## Estimate

Mean value: 11.197045571286015

Effect estimates: [[14.45970606]

[ 7.92732219]

[ 9.21548077]

...

[13.5011984 ]

[10.93485181]

[14.24837647]]

CATE Object and Confidence Intervals

EconML provides its own methods for computing confidence intervals. The example below uses BootstrapInference.
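A bootstrap refits the estimator on data resampled with replacement and reads a confidence interval off the spread of the refitted estimates. The sketch below shows the simple percentile version of that idea, with a least-squares slope standing in for the full estimator; the data, the function names and n_boot=200 are assumptions for illustration, and the interval type may differ from the one EconML uses by default.

```python
import numpy as np

def fit_effect(t, y):
    """Slope of y on t with an intercept (a stand-in for a full estimator)."""
    A = np.column_stack([np.ones(len(t)), t])
    return np.linalg.lstsq(A, y, rcond=None)[0][1]

def bootstrap_ci(t, y, n_boot=200, alpha=0.05, seed=0):
    """Percentile bootstrap: refit on rows resampled with replacement."""
    rng = np.random.default_rng(seed)
    n = len(t)
    draws = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)
        draws.append(fit_effect(t[idx], y[idx]))
    return np.quantile(draws, [alpha / 2, 1 - alpha / 2])

rng = np.random.default_rng(5)
t = rng.normal(size=2000)
y = 11.0 * t + rng.normal(scale=3.0, size=2000)
est = fit_effect(t, y)
lo, hi = bootstrap_ci(t, y)
print(f"estimate={est:.3f}, 95% CI=({lo:.3f}, {hi:.3f})")
```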

[11]:

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LassoCV

from sklearn.ensemble import GradientBoostingRegressor

from econml.inference import BootstrapInference

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = "ate",

confidence_intervals=True,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final": LassoCV(fit_intercept=False),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{

'inference': BootstrapInference(n_bootstrap_samples=100, n_jobs=-1),

}

})

print(dml_estimate)

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

## Realized estimand

b: y~v0+W3+W0+W2+W1 | X0,X1

Target units: ate

## Estimate

Mean value: 11.149665608777859

Effect estimates: [[14.44776338]

[ 7.89687314]

[ 9.15672373]

...

[13.46868416]

[10.87034851]

[14.22330456]]

95.0% confidence interval: [[[14.47334195 7.77493804 9.08285854 ... 13.49814084 10.85713677

14.24807635]]

[[14.75982633 7.99995529 9.23683603 ... 13.72147037 10.99448359

14.51946201]]]

We can also provide new inputs as target units and estimate CATE on them.
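The DataFrame of new inputs only needs the effect-modifier columns, because the CATE here is modeled as a function of X alone. The sketch below builds such a frame the same way as the cell below and evaluates a hypothetical linear CATE on it; the coefficients are made up, not taken from the fitted DML model.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(6)
test_cols = ["X0", "X1"]   # effect-modifier names, as in this notebook
# One uniform(0, 1) draw of size 10 per column, transposed into rows
test_arr = [rng.uniform(0, 1, 10) for _ in range(len(test_cols))]
test_df = pd.DataFrame(np.array(test_arr).transpose(), columns=test_cols)

# Hypothetical fitted linear CATE: theta(x) = 10 + 1.5*x0 - 2.0*x1
intercept, slopes = 10.0, np.array([1.5, -2.0])
cate_new = intercept + test_df[test_cols].to_numpy() @ slopes
print(test_df.shape, cate_new.round(2))
```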

[12]:

test_cols= data['effect_modifier_names'] # only need effect modifiers' values

test_arr = [np.random.uniform(0,1, 10) for _ in range(len(test_cols))] # all variables are sampled uniformly, sample of 10

test_df = pd.DataFrame(np.array(test_arr).transpose(), columns=test_cols)

dml_estimate = model.estimate_effect(identified_estimand,

method_name="backdoor.econml.dml.DML",

target_units = test_df,

confidence_intervals=False,

method_params={"init_params":{'model_y':GradientBoostingRegressor(),

'model_t': GradientBoostingRegressor(),

"model_final":LassoCV(),

'featurizer':PolynomialFeatures(degree=1, include_bias=True)},

"fit_params":{}

})

print(dml_estimate.cate_estimates)

[[12.12394393]

[11.56905609]

[12.33823836]

[12.32042514]

[10.97170289]

[12.26657423]

[12.50776903]

[11.52478607]

[12.16132405]

[12.14057139]]

We can also retrieve the raw EconML estimator object for further operations.

[13]:

print(dml_estimate._estimator_object)

<econml.dml.dml.DML object at 0x7fe324e3abe0>

Works with any EconML method

In addition to double machine learning, below are example analyses using orthogonal forests, DRLearner (which still has a bug to fix), and neural network-based instrumental variables.

Binary treatment, Binary outcome

[14]:

data_binary = dowhy.datasets.linear_dataset(BETA, num_common_causes=4, num_samples=10000,

num_instruments=2, num_effect_modifiers=2,

treatment_is_binary=True, outcome_is_binary=True)

# convert boolean values to {0,1} numeric

data_binary['df'].v0 = data_binary['df'].v0.astype(int)

data_binary['df'].y = data_binary['df'].y.astype(int)

print(data_binary['df'])

model_binary = CausalModel(data=data_binary["df"],

treatment=data_binary["treatment_name"], outcome=data_binary["outcome_name"],

graph=data_binary["gml_graph"])

identified_estimand_binary = model_binary.identify_effect(proceed_when_unidentifiable=True)

X0 X1 Z0 Z1 W0 W1 W2 \

0 0.489904 0.096652 1.0 0.003280 -1.212777 0.358605 0.382168

1 -0.521101 1.066729 0.0 0.303107 -0.321988 1.705521 0.646738

2 0.442225 -0.689376 1.0 0.231044 0.140888 1.820693 3.334337

3 -0.743038 -0.164462 1.0 0.102772 0.230746 1.643060 1.128348

4 0.734149 -0.261472 1.0 0.531165 -0.288206 -1.385953 0.972841

... ... ... ... ... ... ... ...

9995 -0.525539 1.269989 1.0 0.392007 1.046142 2.115656 2.279879

9996 0.497916 0.975014 1.0 0.160181 -1.181845 -1.094077 1.543141

9997 -0.026434 -1.085930 1.0 0.074459 1.233890 0.826205 0.936989

9998 -0.044771 0.242069 1.0 0.566800 0.337112 0.347759 0.106970

9999 -0.745530 1.622311 0.0 0.830792 2.201512 0.426812 -0.696532

W3 v0 y

0 -0.582397 1 1

1 0.824977 1 1

2 0.394061 1 1

3 1.140933 1 1

4 2.279420 1 1

... ... .. ..

9995 0.204119 1 1

9996 2.290268 1 1

9997 0.497400 1 1

9998 1.097935 1 1

9999 0.614704 1 1

[10000 rows x 10 columns]

Using DRLearner estimator

[15]:

from sklearn.linear_model import LogisticRegressionCV

#todo needs binary y

drlearner_estimate = model_binary.estimate_effect(identified_estimand_binary,

method_name="backdoor.econml.dr.LinearDRLearner",

confidence_intervals=False,

method_params={"init_params":{

'model_propensity': LogisticRegressionCV(cv=3, solver='lbfgs') # multi_class omitted: 'auto' is already the default

},

"fit_params":{}

})

print(drlearner_estimate)

print("True causal estimate is", data_binary["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3, W_0, W_2, W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W0,W2,W1,U) = P(y|v0,W3,W0,W2,W1)

## Realized estimand

b: y~v0+W3+W0+W2+W1 | X0,X1

Target units: ate

## Estimate

Mean value: 0.665623146096894

Effect estimates: [[0.66343849]

[0.64758808]

[0.66003439]

...

[0.64998401]

[0.6538818 ]

[0.64515619]]

True causal estimate is 0.2493
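The DRLearner is built on a doubly robust (AIPW) pseudo-outcome that combines per-arm outcome models with a propensity model; a final model then regresses that pseudo-outcome on X to get the CATE. The numpy sketch below computes the pseudo-outcome and its mean (an ATE estimate) for a binary treatment and a continuous outcome. It assumes linear outcome models, a logistic propensity fitted by Newton's method, clipping at 0.01/0.99 and no cross-fitting, and it is not EconML's implementation.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 20_000
W = rng.normal(size=(n, 2))
p_true = 1 / (1 + np.exp(-(W @ np.array([1.0, -0.7]))))
T = rng.binomial(1, p_true)
Y = 2.0 * T + W @ np.array([1.5, 1.0]) + rng.normal(size=n)   # true ATE = 2

A = np.column_stack([np.ones(n), W])

def fit_logistic(A, t, n_iter=25):
    """Logistic regression by Newton-Raphson; returns fitted probabilities."""
    beta = np.zeros(A.shape[1])
    for _ in range(n_iter):
        p = 1 / (1 + np.exp(-A @ beta))
        grad = A.T @ (t - p)
        hess = A.T @ (A * (p * (1 - p))[:, None])
        beta += np.linalg.solve(hess, grad)
    return 1 / (1 + np.exp(-A @ beta))

def fit_predict(A_train, y_train, A_all):
    return A_all @ np.linalg.lstsq(A_train, y_train, rcond=None)[0]

e = np.clip(fit_logistic(A, T), 0.01, 0.99)   # propensity P(T=1 | W)
mu1 = fit_predict(A[T == 1], Y[T == 1], A)    # E[Y | T=1, W]
mu0 = fit_predict(A[T == 0], Y[T == 0], A)    # E[Y | T=0, W]

# Doubly robust (AIPW) pseudo-outcome; its mean estimates the ATE
psi = mu1 - mu0 + T * (Y - mu1) / e - (1 - T) * (Y - mu0) / (1 - e)
naive = Y[T == 1].mean() - Y[T == 0].mean()
print(f"naive diff: {naive:.3f}, DR estimate: {psi.mean():.3f}")
```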

Instrumental Variable Method

[16]:

import keras

dims_zx = len(model.get_instruments())+len(model.get_effect_modifiers())

dims_tx = len(model._treatment)+len(model.get_effect_modifiers())

treatment_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_zx,)), # sum of dims of Z and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17)])

response_model = keras.Sequential([keras.layers.Dense(128, activation='relu', input_shape=(dims_tx,)), # sum of dims of T and X

keras.layers.Dropout(0.17),

keras.layers.Dense(64, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(32, activation='relu'),

keras.layers.Dropout(0.17),

keras.layers.Dense(1)])

deepiv_estimate = model.estimate_effect(identified_estimand,

method_name="iv.econml.iv.nnet.DeepIV",

target_units = lambda df: df["X0"]>-1,

confidence_intervals=False,

method_params={"init_params":{'n_components': 10, # Number of gaussians in the mixture density networks

'm': lambda z, x: treatment_model(keras.layers.concatenate([z, x])), # Treatment model,

"h": lambda t, x: response_model(keras.layers.concatenate([t, x])), # Response model

'n_samples': 1, # Number of samples used to estimate the response

'first_stage_options': {'epochs':25},

'second_stage_options': {'epochs':25}

},

"fit_params":{}})

print(deepiv_estimate)


Epoch 1/25

313/313 [==============================] - 2s 4ms/step - loss: 8.7182

Epoch 2/25

313/313 [==============================] - 1s 4ms/step - loss: 3.8848

Epoch 3/25

313/313 [==============================] - 1s 4ms/step - loss: 2.7126

Epoch 4/25

313/313 [==============================] - 1s 5ms/step - loss: 2.3840

Epoch 5/25

313/313 [==============================] - 1s 4ms/step - loss: 2.3009

Epoch 6/25

313/313 [==============================] - 1s 3ms/step - loss: 2.2695

Epoch 7/25

313/313 [==============================] - 1s 3ms/step - loss: 2.2531

Epoch 8/25

313/313 [==============================] - 1s 2ms/step - loss: 2.2300

Epoch 9/25

313/313 [==============================] - 1s 3ms/step - loss: 2.2068

Epoch 10/25

313/313 [==============================] - 1s 5ms/step - loss: 2.1900

Epoch 11/25

313/313 [==============================] - 1s 5ms/step - loss: 2.1815

Epoch 12/25

313/313 [==============================] - 1s 4ms/step - loss: 2.1767

Epoch 13/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1624

Epoch 14/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1609

Epoch 15/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1586

Epoch 16/25

313/313 [==============================] - 1s 3ms/step - loss: 2.1419

Epoch 17/25

313/313 [==============================] - 1s 5ms/step - loss: 2.1289

Epoch 18/25

313/313 [==============================] - 1s 4ms/step - loss: 2.1254

Epoch 19/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1239

Epoch 20/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1103

Epoch 21/25

313/313 [==============================] - 1s 2ms/step - loss: 2.1123

Epoch 22/25

313/313 [==============================] - 1s 3ms/step - loss: 2.1024

Epoch 23/25

313/313 [==============================] - 1s 5ms/step - loss: 2.0887

Epoch 24/25

313/313 [==============================] - 1s 4ms/step - loss: 2.0884

Epoch 25/25

313/313 [==============================] - 1s 2ms/step - loss: 2.0766

Epoch 1/25

313/313 [==============================] - 2s 4ms/step - loss: 17930.0625

Epoch 2/25

313/313 [==============================] - 2s 6ms/step - loss: 7088.9463

Epoch 3/25

313/313 [==============================] - 2s 6ms/step - loss: 6421.2710

Epoch 4/25

313/313 [==============================] - 1s 4ms/step - loss: 6279.4985

Epoch 5/25

313/313 [==============================] - 1s 3ms/step - loss: 5940.8018

Epoch 6/25

313/313 [==============================] - 1s 3ms/step - loss: 5967.8521

Epoch 7/25

313/313 [==============================] - 1s 4ms/step - loss: 5930.2974

Epoch 8/25

313/313 [==============================] - 2s 5ms/step - loss: 5809.0239

Epoch 9/25

313/313 [==============================] - 3s 8ms/step - loss: 5858.6870

Epoch 10/25

313/313 [==============================] - 3s 8ms/step - loss: 5987.8755

Epoch 11/25

313/313 [==============================] - 2s 7ms/step - loss: 5794.1372

Epoch 12/25

313/313 [==============================] - 2s 6ms/step - loss: 5898.4888

Epoch 13/25

313/313 [==============================] - 2s 5ms/step - loss: 5847.3872

Epoch 14/25

313/313 [==============================] - 1s 5ms/step - loss: 5818.8799

Epoch 15/25

313/313 [==============================] - 2s 6ms/step - loss: 5753.1304

Epoch 16/25

313/313 [==============================] - 2s 7ms/step - loss: 5925.1919

Epoch 17/25

313/313 [==============================] - 2s 7ms/step - loss: 5782.2402

Epoch 18/25

313/313 [==============================] - 2s 6ms/step - loss: 5768.5786

Epoch 19/25

313/313 [==============================] - 2s 7ms/step - loss: 5741.7646

Epoch 20/25

313/313 [==============================] - 2s 8ms/step - loss: 5674.4023

Epoch 21/25

313/313 [==============================] - 2s 6ms/step - loss: 5789.1982

Epoch 22/25

313/313 [==============================] - 2s 6ms/step - loss: 5838.4785

Epoch 23/25

313/313 [==============================] - 2s 6ms/step - loss: 5773.2202

Epoch 24/25

313/313 [==============================] - 2s 6ms/step - loss: 5712.6611

Epoch 25/25

313/313 [==============================] - 2s 6ms/step - loss: 5843.3638

WARNING:tensorflow:

The following Variables were used a Lambda layer's call (lambda_7), but

are not present in its tracked objects:

<tf.Variable 'dense_3/kernel:0' shape=(3, 128) dtype=float32>

<tf.Variable 'dense_3/bias:0' shape=(128,) dtype=float32>

<tf.Variable 'dense_4/kernel:0' shape=(128, 64) dtype=float32>

<tf.Variable 'dense_4/bias:0' shape=(64,) dtype=float32>

<tf.Variable 'dense_5/kernel:0' shape=(64, 32) dtype=float32>

<tf.Variable 'dense_5/bias:0' shape=(32,) dtype=float32>

<tf.Variable 'dense_6/kernel:0' shape=(32, 1) dtype=float32>

<tf.Variable 'dense_6/bias:0' shape=(1,) dtype=float32>

It is possible that this is intended behavior, but it is more likely

an omission. This is a strong indication that this layer should be

formulated as a subclassed Layer rather than a Lambda layer.


224/224 [==============================] - 1s 2ms/step

224/224 [==============================] - 0s 2ms/step

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: iv

Estimand expression:

$$E\left[\frac{d}{d[Z_1\ Z_0]}(y)\cdot\left(\frac{d}{d[Z_1\ Z_0]}([v_0])\right)^{-1}\right]$$

Estimand assumption 1, As-if-random: If U→→y then ¬(U →→{Z1,Z0})

Estimand assumption 2, Exclusion: If we remove {Z1,Z0}→{v0}, then ¬({Z1,Z0}→y)

## Realized estimand

b: y~v0+W3+W0+W2+W1 | X0,X1

Target units: Data subset defined by a function

## Estimate

Mean value: 1.0939991474151611

Effect estimates: [[ 3.140396 ]

[-0.9264221]

[-0.6861267]

...

[ 2.2031708]

[-0.5570221]

[ 2.6899872]]
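The IV estimand printed above, E[dy/dZ · (dv0/dZ)⁻¹], identifies the effect of v0 on y as the instrument's effect on the outcome divided by its effect on the treatment. With a single instrument this reduces to the Wald ratio cov(Z, y) / cov(Z, v0). The numpy sketch below illustrates why that ratio works. It assumes one instrument, an unobserved confounder `u`, and made-up coefficients; it is not DoWhy's `linear_dataset` generator and not the DeepIV estimator used in the cell above.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
true_effect = 10.0  # hypothetical effect of v0 on y

z = rng.normal(size=n)                       # instrument: affects y only through v0
u = rng.normal(size=n)                       # unobserved confounder of v0 and y
v0 = 2.0 * z + 1.5 * u + rng.normal(size=n)  # treatment
y = true_effect * v0 + 4.0 * u + rng.normal(size=n)


def slope(a, b):
    """OLS slope of b on a (with intercept): cov(a, b) / var(a)."""
    return np.cov(a, b)[0, 1] / np.var(a, ddof=1)


naive = slope(v0, y)              # biased upward because u drives both v0 and y
iv = slope(z, y) / slope(z, v0)   # Wald ratio: (dy/dz) / (dv0/dz)
print(f"naive OLS: {naive:.2f}, IV (Wald): {iv:.2f}")
```

The naive slope absorbs the confounding path through `u`. The ratio only uses variation in v0 that comes from `z`, which is independent of `u` by construction (the as-if-random and exclusion assumptions listed in the estimand).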

Metalearners

[17]:

data_experiment = dowhy.datasets.linear_dataset(BETA, num_common_causes=5, num_samples=10000,

num_instruments=2, num_effect_modifiers=5,

treatment_is_binary=True, outcome_is_binary=False)

# convert boolean values to {0,1} numeric

data_experiment['df']['v0'] = data_experiment['df']['v0'].astype(int)

print(data_experiment['df'])

model_experiment = CausalModel(data=data_experiment["df"],

treatment=data_experiment["treatment_name"], outcome=data_experiment["outcome_name"],

graph=data_experiment["gml_graph"])

identified_estimand_experiment = model_experiment.identify_effect(proceed_when_unidentifiable=True)

X0 X1 X2 X3 X4 Z0 Z1 \

0 0.129227 0.510094 -1.475240 -0.219903 1.758580 0.0 0.019989

1 0.129312 -1.265757 -0.927238 -0.617949 -0.526980 0.0 0.533503

2 1.465955 0.513754 0.600330 -0.991137 -0.343302 1.0 0.041271

3 -0.848521 -0.300619 -0.219193 -0.796686 0.059552 1.0 0.007219

4 0.639915 1.777497 0.753570 0.447037 0.221725 0.0 0.246742

... ... ... ... ... ... ... ...

9995 0.993821 0.476915 -1.997253 1.624126 -0.839789 0.0 0.339309

9996 -0.704006 0.065800 0.758941 1.907440 1.512859 0.0 0.592743

9997 1.980971 1.174533 0.204603 -0.773177 0.440402 1.0 0.221890

9998 0.008317 -0.227060 1.315543 0.992438 2.514962 1.0 0.756688

9999 1.202715 0.530296 -0.650898 -0.748343 1.811226 0.0 0.825335

W0 W1 W2 W3 W4 v0 y

0 -2.455495 0.496016 2.374215 0.096688 -0.784725 0 4.121399

1 -1.231912 -1.014431 -0.961038 -1.222188 -0.131987 0 -8.610506

2 0.896354 -0.232496 1.631606 0.133437 0.194136 1 19.346896

3 -1.965647 1.090449 1.785160 -1.960019 -1.361789 1 7.154199

4 1.736414 -0.640084 -0.625175 -0.821564 0.498603 1 16.337337

... ... ... ... ... ... .. ...

9995 -0.594311 -1.394031 1.495237 -1.488875 -0.615948 0 -3.693408

9996 -0.345999 -1.243541 -1.070301 0.570454 1.844072 1 13.746460

9997 -0.760516 0.662359 0.657406 -0.077945 -0.547748 1 21.460885

9998 0.886693 0.759553 0.980638 -2.793115 1.584166 1 25.485743

9999 0.831213 -0.392465 1.755727 0.407155 0.179335 1 24.272205

[10000 rows x 14 columns]

[18]:

from sklearn.ensemble import RandomForestRegressor

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

method_params={"init_params":{

'models': RandomForestRegressor()

},

"fit_params":{}

})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

WARNING:dowhy.causal_estimator:Concatenating common_causes and effect_modifiers and providing a single list of variables to metalearner estimator method, TLearner. EconML metalearners accept a single X argument.

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3,W_4,W_0,W_2,W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W4,W0,W2,W1,U) = P(y|v0,W3,W4,W0,W2,W1)

## Realized estimand

b: y~v0+X3+X1+X2+X0+X4+W3+W4+W0+W2+W1

Target units: ate

## Estimate

Mean value: 15.263186238991091

Effect estimates: [[15.79690208]

[ 5.37643925]

[16.59310522]

...

[20.8231476 ]

[23.31454111]

[21.81387794]]

True causal estimate is 13.921783106444051
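A T-learner fits one outcome model on treated units and another on control units. It estimates the CATE at features x as the difference of their predictions, μ1(x) − μ0(x). As the warning above notes, DoWhy passes common causes and effect modifiers to it together as a single feature matrix X. The minimal sketch below shows the idea. It assumes linear least-squares base models in place of the `RandomForestRegressor` used in the cell above, and it uses a made-up data-generating process.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000

x = rng.normal(size=(n, 2))            # x[:, 0]: effect modifier, x[:, 1]: common cause
p = 1 / (1 + np.exp(-x[:, 1]))         # treatment probability depends on the common cause
t = rng.binomial(1, p)                 # binary treatment
true_cate = 5.0 + 3.0 * x[:, 0]        # hypothetical heterogeneous effect
y = 2.0 * x[:, 1] + t * true_cate + rng.normal(size=n)


def fit_linear(features, target):
    """Least-squares fit with an intercept; returns a predict function."""
    design = np.column_stack([np.ones(len(features)), features])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return lambda f: np.column_stack([np.ones(len(f)), f]) @ coef


# T-learner: one model per treatment arm, each trained only on that arm's units.
mu1 = fit_linear(x[t == 1], y[t == 1])
mu0 = fit_linear(x[t == 0], y[t == 0])
cate_hat = mu1(x) - mu0(x)             # per-unit effect estimates

print("estimated ATE:", cate_hat.mean(), "true ATE:", true_cate.mean())
```

Averaging the per-unit estimates gives the "Mean value" reported by the estimator. The per-unit values correspond to the "Effect estimates" array.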

Avoiding retraining the estimator

Once an estimator is fitted, it can be reused to estimate effects on different data points without retraining. To do so, pass fit_estimator=False to estimate_effect. This works for any EconML estimator. We show an example for the T-learner below.

[19]:

# For metalearners, provide all the features (everything except the treatment, outcome, and instruments)

metalearner_estimate = model_experiment.estimate_effect(identified_estimand_experiment,

method_name="backdoor.econml.metalearners.TLearner",

confidence_intervals=False,

fit_estimator=False,

target_units=data_experiment["df"].drop(["v0","y", "Z0", "Z1"], axis=1)[9995:],

method_params={})

print(metalearner_estimate)

print("True causal estimate is", data_experiment["ate"])

*** Causal Estimate ***

## Identified estimand

Estimand type: EstimandType.NONPARAMETRIC_ATE

### Estimand : 1

Estimand name: backdoor

Estimand expression:

$$\frac{d}{d[v_0]}\left(E[y \mid W_3,W_4,W_0,W_2,W_1]\right)$$

Estimand assumption 1, Unconfoundedness: If U→{v0} and U→y then P(y|v0,W3,W4,W0,W2,W1,U) = P(y|v0,W3,W4,W0,W2,W1)

## Realized estimand

b: y~v0+X3+X1+X2+X0+X4+W3+W4+W0+W2+W1

Target units: Data subset provided as a data frame

## Estimate

Mean value: 18.01798358591133

Effect estimates: [[ 7.2915195 ]

[23.13507182]

[12.07144299]

[27.61115405]

[19.98072957]]

True causal estimate is 13.921783106444051
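Reusing a fitted estimator means keeping the learned models and running only their prediction step on the new rows. That is the work `fit_estimator=False` avoids repeating. The `TLearnerSketch` class below is hypothetical and is not EconML's `TLearner` API. It simply separates `fit` from `effect` so that `effect` can be called on any subset of rows, such as the last five rows selected in the cell above, without retraining.

```python
import numpy as np


class TLearnerSketch:
    """Hypothetical minimal T-learner with linear base models (not EconML's API)."""

    @staticmethod
    def _design(x):
        # Prepend an intercept column.
        return np.column_stack([np.ones(len(x)), x])

    def _fit_arm(self, x, y):
        coef, *_ = np.linalg.lstsq(self._design(x), y, rcond=None)
        return coef

    def fit(self, y, t, x):
        # Training happens only here: one linear model per treatment arm.
        self.coef1_ = self._fit_arm(x[t == 1], y[t == 1])
        self.coef0_ = self._fit_arm(x[t == 0], y[t == 0])
        return self

    def effect(self, x):
        # Prediction only: reuses the stored coefficients, no refitting.
        d = self._design(x)
        return d @ self.coef1_ - d @ self.coef0_


rng = np.random.default_rng(2)
n = 10_000
x = rng.normal(size=(n, 3))
t = rng.binomial(1, 0.5, size=n)
y = x @ np.array([1.0, -2.0, 0.5]) + t * (4.0 + 2.0 * x[:, 0]) + rng.normal(size=n)

est = TLearnerSketch().fit(y, t, x)   # train once
last_five = est.effect(x[9995:])      # reuse on the last five rows, like [9995:] in the cell above
all_rows = est.effect(x)              # reuse again on every row, still without refitting
print(last_five.round(2))
```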

Refuting the estimate

Adding a random common cause variable

[20]:

res_random=model.refute_estimate(identified_estimand, dml_estimate, method_name="random_common_cause")

print(res_random)

Refute: Add a random common cause

Estimated effect:11.992439117764018

New effect:12.006660844848646

p value:0.76
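The random common cause refuter adds an independently generated variable as an extra common cause and re-estimates. Because the variable is pure noise, a sound estimate should barely move, as above (11.99 vs 12.01). The numpy sketch below illustrates the idea. It uses a linear backdoor regression as a stand-in for the DML estimator, and its data, coefficients, and the helper name `backdoor_ols` are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 10_000

w = rng.normal(size=(n, 2))                            # observed common causes
v0 = w @ np.array([1.0, -1.0]) + rng.normal(size=n)    # treatment
y = 12.0 * v0 + w @ np.array([3.0, 2.0]) + rng.normal(size=n)


def backdoor_ols(y, t, covariates):
    """Coefficient on t from regressing y on [1, t, covariates]."""
    design = np.column_stack([np.ones(len(t)), t, covariates])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]


original = backdoor_ols(y, v0, w)

# Refutation: append a freshly drawn random "common cause" and re-estimate, many times.
new_effects = [
    backdoor_ols(y, v0, np.column_stack([w, rng.normal(size=n)]))
    for _ in range(20)
]
print(f"estimated effect: {original:.4f}, new effect: {np.mean(new_effects):.4f}")
```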

Adding an unobserved common cause variable

[21]:

res_unobserved=model.refute_estimate(identified_estimand, dml_estimate, method_name="add_unobserved_common_cause",

confounders_effect_on_treatment="linear", confounders_effect_on_outcome="linear",

effect_strength_on_treatment=0.01, effect_strength_on_outcome=0.02)

print(res_unobserved)

Refute: Add an Unobserved Common Cause

Estimated effect:11.992439117764018

New effect:12.024266312057163
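The unobserved common cause refuter simulates a confounder the estimator cannot see, with given strengths on the treatment and the outcome (0.01 and 0.02, linear, in the cell above), and reports how far the estimate moves. The sketch below is a simplified simulation in that spirit, not DoWhy's exact procedure. It draws a hidden `u` and adds `kappa_t * u` to the treatment and `kappa_y * u` to the outcome (hypothetical parameter names). It then re-runs a linear backdoor regression that leaves `u` out, keeping the same 1:2 strength ratio at increasing scales.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 10_000
w = rng.normal(size=n)                          # observed common cause
v0 = w + rng.normal(size=n)                     # treatment
y = 12.0 * v0 + 3.0 * w + rng.normal(size=n)    # outcome, true effect 12


def backdoor_ols(y, t, covariates):
    """Coefficient on t from regressing y on [1, t, covariates]."""
    design = np.column_stack([np.ones(len(t)), t, covariates])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]


original = backdoor_ols(y, v0, w)

# Simulated unobserved confounder u: added linearly to treatment and outcome
# with strengths kappa_t and kappa_y, then left OUT of the regression.
shifts = {}
for kappa_t, kappa_y in [(0.01, 0.02), (0.1, 0.2), (0.5, 1.0)]:
    u = rng.normal(size=n)
    new_effect = backdoor_ols(y + kappa_y * u, v0 + kappa_t * u, w)
    shifts[(kappa_t, kappa_y)] = new_effect - original
    print(f"kappa_t={kappa_t}, kappa_y={kappa_y}: new effect {new_effect:.4f}")
```

At the strengths used in the notebook the shift is negligible, which matches the small change reported above. Larger strengths move the estimate substantially, which is the kind of sensitivity this refuter is meant to expose.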

Replacing treatment with a random (placebo) variable

[22]:

res_placebo=model.refute_estimate(identified_estimand, dml_estimate,

method_name="placebo_treatment_refuter", placebo_type="permute",

num_simulations=10 # 100 or more simulations are recommended; 10 is used here for speed

)

print(res_placebo)

WARNING:dowhy.causal_refuter:We assume a Normal Distribution as the sample has less than 100 examples.

Note: The underlying distribution may not be Normal. We assume that it approaches normal with the increase in sample size.

Refute: Use a Placebo Treatment

Estimated effect:11.992439117764018

New effect:0.01626382750118571

p value:0.3933780406802491
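The placebo refuter replaces the treatment with a random permutation of itself. This breaks any link between treatment and outcome while keeping the treatment's distribution. Re-estimating should then give an effect near zero, as above (0.016). The numpy sketch below illustrates the permutation step. It uses a linear backdoor regression as a stand-in for the DML estimator, with hypothetical data and a hypothetical helper `backdoor_ols`, and it does not reproduce DoWhy's p-value computation.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 10_000
w = rng.normal(size=n)                          # observed common cause
v0 = w + rng.normal(size=n)                     # treatment
y = 12.0 * v0 + 3.0 * w + rng.normal(size=n)    # outcome, true effect 12


def backdoor_ols(y, t, covariates):
    """Coefficient on t from regressing y on [1, t, covariates]."""
    design = np.column_stack([np.ones(len(t)), t, covariates])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]


original = backdoor_ols(y, v0, w)

# Placebo: shuffle the treatment column so it carries no information about y.
placebo_effects = np.array(
    [backdoor_ols(y, rng.permutation(v0), w) for _ in range(10)]
)
print(f"estimated effect: {original:.3f}, mean placebo effect: {placebo_effects.mean():.3f}")
```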

Removing a random subset of the data

[23]:

res_subset=model.refute_estimate(identified_estimand, dml_estimate,

method_name="data_subset_refuter", subset_fraction=0.8,

num_simulations=10)

print(res_subset)

WARNING:dowhy.causal_refuter:We assume a Normal Distribution as the sample has less than 100 examples.

Note: The underlying distribution may not be Normal. We assume that it approaches normal with the increase in sample size.

Refute: Use a subset of data

Estimated effect:11.992439117764018

New effect:11.991376826422218

p value:0.4869453171367034
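The data subset refuter re-estimates the effect on random subsets of the rows (80% here) and checks that the estimate stays stable, as it does above (11.992 vs 11.991). The numpy sketch below illustrates the resampling loop. It assumes a linear backdoor regression as a stand-in for the DML estimator, and its data and helper `backdoor_ols` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(6)
n = 10_000
w = rng.normal(size=n)                          # observed common cause
v0 = w + rng.normal(size=n)                     # treatment
y = 12.0 * v0 + 3.0 * w + rng.normal(size=n)    # outcome, true effect 12


def backdoor_ols(y, t, covariates):
    """Coefficient on t from regressing y on [1, t, covariates]."""
    design = np.column_stack([np.ones(len(t)), t, covariates])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1]


original = backdoor_ols(y, v0, w)
subset_size = int(0.8 * n)  # mirrors subset_fraction=0.8 in the cell above

subset_effects = []
for _ in range(10):
    idx = rng.choice(n, size=subset_size, replace=False)   # rows sampled without replacement
    subset_effects.append(backdoor_ols(y[idx], v0[idx], w[idx]))
subset_effects = np.array(subset_effects)

print(f"estimated effect: {original:.4f}, mean subset effect: {subset_effects.mean():.4f}")
```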

More refutation methods are planned, especially ones specific to CATE estimators.