dodiscover.toporder.SCORE

- class dodiscover.toporder.SCORE(eta_G=0.001, eta_H=0.001, alpha=0.05, prune=True, n_splines=10, splines_degree=3, estimate_variance=False, pns=False, pns_num_neighbors=None, pns_threshold=1)

The SCORE algorithm for causal discovery.

SCORE [1] iteratively builds a topological ordering by identifying leaf nodes: at each step it compares the variances of the diagonal entries of the Hessian of the log-likelihood. It then prunes the fully connected DAG representation of the ordering with CAM pruning [2]. The method assumes an Additive Noise Model with Gaussian noise terms.
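The "fully connected DAG representation of the ordering" mentioned above can be pictured with a small NumPy sketch. It is only an illustration, not the library's code: the helper name `full_dag_from_order`, the convention that `A[i, j] == 1` means an edge from node i to node j, and the assumption that the order lists nodes from source to leaf are all choices made for this example.

```python
import numpy as np


def full_dag_from_order(order):
    """Dense adjacency matrix where each node points to every node after it.

    Assumed conventions (not taken from the library): `order` lists node
    indices from source to leaf, and A[i, j] == 1 encodes the edge i -> j.
    """
    d = len(order)
    A = np.zeros((d, d), dtype=int)
    for pos, parent in enumerate(order):
        for child in order[pos + 1:]:
            A[parent, child] = 1
    return A


# Node 2 comes first, then node 0, then node 1.
order = [2, 0, 1]
A_dense = full_dag_from_order(order)
print(A_dense)
```

A matrix like `A_dense` contains every edge that is compatible with the ordering; the pruning step then removes the ones that the data do not support.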

- Parameters:

- eta_G: float, optional

Regularization parameter for Stein gradient estimator, default is 0.001.

- eta_H: float, optional

Regularization parameter for Stein Hessian estimator, default is 0.001.

- alpha: float, optional

Alpha cutoff value for variable selection with hypothesis testing over regression coefficients, default is 0.05.

- prune: bool, optional

If True (default), apply CAM-pruning after finding the topological order.

- n_splines: int, optional

Number of splines to use for the feature function, default is 10. Automatically decreased in case of insufficient samples.

- splines_degree: int, optional

Order of spline to use for the feature function, default is 3.

- estimate_variance: bool, optional

If True, store estimates of the variance of the noise terms, computed from the diagonal of the Stein Hessian estimator. Default is False.

- pns: bool, optional

If True, perform Preliminary Neighbour Search (PNS) before the CAM pruning step, default is False. This allows CAM pruning and ordering to scale to large graphs.

- pns_num_neighbors: int, optional

Number of neighbors to use for PNS. If None (default) use all variables.

- pns_threshold: float, optional

Threshold to use for PNS, default is 1.

Notes

Prior knowledge about the included and excluded directed edges in the output DAG is supported. It is not possible to provide explicit constraints on the relative positions of nodes in the topological ordering. However, explicitly including a directed edge in the DAG defines an implicit constraint on the relative position of the nodes in the topological ordering (i.e. if the directed edge (i, j) is encoded in the graph, node i will precede node j in the output order).

References

Methods

- hessian(X, eta_G, eta_H): Stein estimator of the Hessian of log p(x).

- hessian_diagonal(X, eta_G, eta_H): Stein estimator of the diagonal of the Hessian matrix of log p(x).

- learn_graph(data_df, context): Fit topological order based causal discovery algorithm on input data.

- prune(X, A_dense, G_included, G_excluded): Prune the dense adjacency matrix A_dense from spurious edges.

- score(X, eta_G[, K, nablaK]): Stein gradient estimator of the score, i.e. gradient log p(x).

- hessian(X, eta_G, eta_H)

Stein estimator of the Hessian of log p(x).

The Hessian matrix is estimated efficiently by exploiting the Stein identity. Implements [1].

- Parameters:

- X: np.ndarray of shape (n_samples, n_nodes)

I.i.d. samples from the joint distribution p(X).

- eta_G: float

Regularization parameter for ridge regression in the Stein gradient estimator.

- eta_H: float

Regularization parameter for ridge regression in the Stein Hessian estimator.

- Returns:

- H: np.ndarray

Stein estimator of the Hessian matrix of log p(x).

References

- hessian_diagonal(X, eta_G, eta_H)

Stein estimator of the diagonal of the Hessian matrix of log p(x).

- Parameters:

- X: np.ndarray of shape (n_samples, n_nodes)

I.i.d. samples from the joint distribution p(X).

- eta_G: float

Regularization parameter for ridge regression in the Stein gradient estimator.

- eta_H: float

Regularization parameter for ridge regression in the Stein Hessian estimator.

- Returns:

- H_diag: np.ndarray

Stein estimator of the diagonal of the Hessian matrix of log p(x).
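As a rough illustration of how a Stein estimate of the Hessian diagonal can be used to pick a leaf, here is a minimal NumPy sketch. It is not the library's implementation: the Gaussian kernel with a median-heuristic bandwidth, the helper name `stein_hessian_diagonal`, the sign conventions and the toy data are all assumptions made for this example. It relies on the second-order Stein identity E[h(x)(∂²ⱼ log p(x) + (∂ⱼ log p(x))²)] = E[∂²ⱼ h(x)], solved by ridge regression.

```python
import numpy as np


def stein_hessian_diagonal(X, eta_G=0.001, eta_H=0.001):
    """Sketch of a Stein estimator of diag(Hessian of log p) at each sample."""
    n, _ = X.shape
    X_diff = X[:, None, :] - X[None, :, :]       # (n, n, d): x_i - x_k
    sq_dist = np.sum(X_diff**2, axis=2)
    s = np.median(np.sqrt(sq_dist))              # median heuristic (assumption)
    K = np.exp(-sq_dist / (2 * s**2))            # Gaussian kernel matrix

    # First derivatives of the kernel w.r.t. its second argument, summed over k.
    nablaK = np.einsum("ik,ikj->ij", K, X_diff) / s**2
    # Ridge-regularised Stein estimate of the score, shape (n, d).
    G = -np.linalg.solve(K + eta_G * np.eye(n), nablaK)

    # Second derivatives of the kernel along each coordinate, summed over k.
    nabla2K = np.einsum("ik,ikj->ij", K, X_diff**2 / s**4 - 1 / s**2)
    # Second-order Stein identity, again solved with a ridge penalty.
    return -G**2 + np.linalg.solve(K + eta_H * np.eye(n), nabla2K)


# Toy additive noise model: x0 -> x1 with a nonlinear mechanism.
rng = np.random.default_rng(0)
n = 300
x0 = rng.normal(size=n)
x1 = x0**2 + rng.normal(size=n)
X = np.column_stack([x0, x1])

H_diag = stein_hessian_diagonal(X)
# Candidate leaf: the column whose Hessian entry varies least across samples.
leaf = int(np.argmin(H_diag.var(axis=0)))
print(H_diag.shape, leaf)
```

Under the model assumptions the Hessian entry of a leaf is constant across samples, so the column with the smallest variance is the natural candidate. On a finite sample the estimate is noisy, and the selected index is not guaranteed to be the true leaf.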

- learn_graph(data_df, context)

Fit topological order based causal discovery algorithm on input data.

- Parameters:

- data_df: pd.DataFrame

DataFrame of the input data.

- context: Context

The context of the causal discovery problem.

- prune(X, A_dense, G_included, G_excluded)

Prune the dense adjacency matrix A_dense from spurious edges.

Use sparse regression over the data matrix X for variable selection over the edges in the dense (potentially fully connected) adjacency matrix A_dense.

- Parameters:

- X: np.ndarray of shape (n_samples, n_nodes)

Matrix of the data.

- A_dense: np.ndarray of shape (n_nodes, n_nodes)

Dense adjacency matrix to be pruned.

- G_included: nx.DiGraph

Graph with edges that are required to be included in the output. It encodes assumptions and prior knowledge about the causal graph.

- G_excluded: nx.DiGraph

Graph with edges that are required to be excluded from the output. It encodes assumptions and prior knowledge about the causal graph.

- Returns:

- A: np.ndarray

The pruned adjacency matrix output of the causal discovery algorithm.
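To make the idea of variable selection over the edges of A_dense more concrete, the following sketch swaps in plain least squares with a coefficient threshold in place of the spline-based regression and hypothesis tests used by CAM pruning. The function name `prune_sketch`, the `threshold` parameter and the use of plain lists of `(i, j)` index pairs instead of `nx.DiGraph` objects are hypothetical simplifications for this example only.

```python
import numpy as np


def prune_sketch(X, A_dense, included=(), excluded=(), threshold=0.3):
    """Keep edge i -> j if the least-squares coefficient of node i is large.

    Simplified stand-in for CAM pruning: linear regression plus a threshold.
    `included` and `excluded` are iterables of (i, j) index pairs.
    """
    d = A_dense.shape[0]
    A = np.zeros_like(A_dense)
    excluded = set(excluded)
    for j in range(d):
        # Candidate parents of j: edges in A_dense that are not forbidden.
        parents = [i for i in range(d) if A_dense[i, j] and (i, j) not in excluded]
        if not parents:
            continue
        # Centre the data so that no intercept term is needed.
        P = X[:, parents] - X[:, parents].mean(axis=0)
        y = X[:, j] - X[:, j].mean()
        coef, *_ = np.linalg.lstsq(P, y, rcond=None)
        for i, c in zip(parents, coef):
            if abs(c) > threshold:
                A[i, j] = 1
    # Required edges are always kept.
    for i, j in included:
        A[i, j] = 1
    return A


# Toy data: x0 -> x1, while x2 is independent of both.
rng = np.random.default_rng(0)
n = 2000
x0 = rng.normal(size=n)
x1 = 2 * x0 + rng.normal(size=n)
x2 = rng.normal(size=n)
X = np.column_stack([x0, x1, x2])

# Fully connected DAG for the order 0, 1, 2 (A[i, j] == 1 means i -> j).
A_dense = np.triu(np.ones((3, 3), dtype=int), k=1)

A_free = prune_sketch(X, A_dense)
A_prior = prune_sketch(X, A_dense, included=[(1, 2)], excluded=[(0, 1)])
print(A_free)
print(A_prior)
```

Without prior knowledge only the edge 0 -> 1 survives in this toy example. With the constraints, the excluded edge 0 -> 1 is never considered and the included edge 1 -> 2 is kept regardless of the regression result.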

- score(X, eta_G, K=None, nablaK=None)

Stein gradient estimator of the score, i.e. gradient log p(x).

The Stein gradient estimator [3] exploits the Stein identity to estimate the score function efficiently.

- Parameters:

- X: np.ndarray of shape (n_samples, n_nodes)

I.i.d. samples from the joint distribution p(X).

- eta_G: float

Regularization parameter for ridge regression in the Stein gradient estimator.

- K: np.ndarray of shape (n_samples, n_samples)

Gaussian kernel evaluated at X, by default None. If K is None, it is computed inside of the method.

- nablaK: np.ndarray of shape (n_samples, )

Evaluated dot product <nabla, K>, by default None. If nablaK is None, it is computed inside of the method.

- Returns:

- G: np.ndarray

Stein estimator of the score function.
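A minimal NumPy sketch of a Stein gradient estimator is given below as an illustration of the ridge-regression form described above. It is not the library's code: the function name `stein_score`, the median-heuristic bandwidth, and the shape and sign convention chosen for `nablaK` (here an array of shape `(n_samples, n_nodes)`) are assumptions of this example.

```python
import numpy as np


def stein_score(X, eta_G=0.001):
    """Sketch of a Stein estimator of grad log p(x) at each sample."""
    n, _ = X.shape
    X_diff = X[:, None, :] - X[None, :, :]       # (n, n, d): x_i - x_k
    sq_dist = np.sum(X_diff**2, axis=2)
    s = np.median(np.sqrt(sq_dist))              # median heuristic (assumption)
    K = np.exp(-sq_dist / (2 * s**2))            # Gaussian kernel evaluated at X

    # nablaK[i, j] = sum_k d kappa(x_i, x_k) / d x_k^j for the Gaussian kernel.
    nablaK = np.einsum("ik,ikj->ij", K, X_diff) / s**2

    # Ridge-regularised solution of the Monte Carlo Stein identity.
    return -np.linalg.solve(K + eta_G * np.eye(n), nablaK)


rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
G = stein_score(X)

# Correlation between the estimated score and the data, first coordinate.
corr = np.corrcoef(G[:, 0], X[:, 0])[0, 1]
print(G.shape, corr)
```

For standard normal samples the true score is -x, so the estimate would be expected to be negatively correlated with the data. The strength of that correlation depends on the sample size, the kernel bandwidth and `eta_G`.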

References

Examples using dodiscover.toporder.SCORE

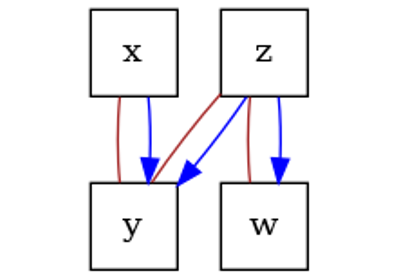

Order-based algorithms for causal discovery from observational data without latent confounders

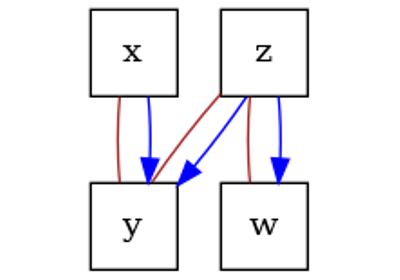

Using prior knowledge in order-based algorithms for causal discovery